Last updated on September 6th, 2024 at 08:56 am

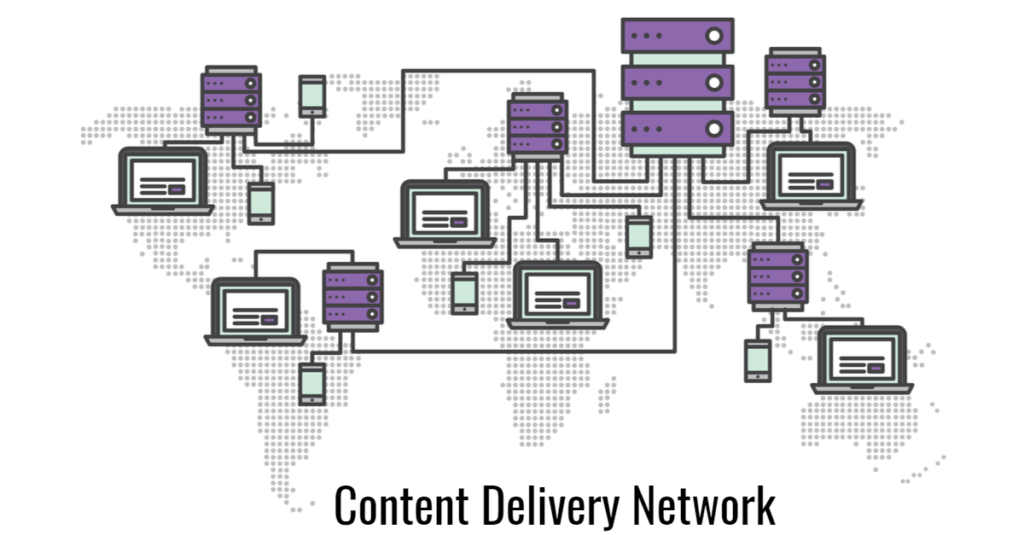

The primary goal of a Content Delivery Network (CDN) is to deliver data to the end-user with high availability and high performance. A CDN accomplishes this by hosting the data as close to the end-user as possible, through POPs (Points of Presence: locations where the CDN has a node) distributed geographically across the world in strategic locations.

This post is authored by Sindhura Gade, a Software Engineer and big data enthusiast, and was reviewed by Anup Gautam, a Solution Architect at Akamai. This post is part of the collaborative blogging platform initiative by Mario Gerard.

What is a CDN and Why do we need it?

In simple terms, retrieving data/content on the internet will be faster if copies of the data are made available on multiple servers at multiple locations. For example, if a user situated in Italy is visiting a website that is hosted in California, he/she would experience latency (delay in website response/page loading) due to the data being retrieved over such a long distance, i.e. from a server that is so far away.

To address this physical disadvantage, more servers/nodes can be set up at strategically chosen physical locations to store copies of the website’s data such as images, JavaScript, CSS etc. The user’s requests are then processed by the server situated at the closest physical location, reducing the turn-around time and enhancing the user experience. This network of globally distributed servers, which aims to provide a common set of data to users based on their location, is a Content Delivery Network (CDN).

This is why CDNs are also known as edge caches: they sit at the closest possible edge to the customer, which provides the end-user with the data they are looking for at the lowest possible latency. Locations are carefully handpicked depending on the concentration of users and their data usage patterns.

The most popular use case for CDNs is media files, be it music, pictures, or video, because sending media over long distances, say from your home datacenter in Chicago to an end-user in Japan, is extremely time-consuming. A CDN makes consuming large chunks of data much faster for the end-user, which improves the customer experience. Though CDNs were originally used only for media files, they are increasingly used to store HTML, CSS, and JavaScript files as well. This way your entire static content can show up faster at the user’s location simply by using a CDN.

A CDN can provide several advantages:

- Enhanced end-user experience

- Improved download speeds and accelerated website performance

- Fewer site crashes – as the processing is decentralized and the load is distributed

Note that whether a CDN benefits a website depends on several factors such as site optimization, traffic, visitor count, geographical relevance, cost, etc.

For websites with a global/non-regional end-user presence and heavy traffic, a CDN might seem like an obvious choice. However, the first approach to increasing the speed of the website should be to optimize the site itself, i.e. caching, optimizing the database, performance tuning the web server, etc.

For a localized website with minimal traffic, opting for a CDN may create additional and unnecessary hops for servicing a user request, thus increasing the latency. Again, applying the above-mentioned optimizations may be more effective.

How does a Content Delivery Network (CDN) work?

Every request from a web browser hits the DNS (Domain Name System) first. The DNS directs the request to the CDN hub that is responsible for managing the corresponding domain. The CDN hub determines the “closest” server via load balancing techniques such as:

- Geographical location – the closest server

- Provider’s preference – cheaper server from a processing cost perspective

- Server load – if the closest server is at its full capacity, the second closest could provide a faster response
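The three criteria above can be combined into one selection rule. Here is a minimal sketch, assuming a hypothetical `EdgeServer` record and a simple policy (nearest server that is not overloaded, with cost as the tie-breaker). Real CDN hubs use far richer signals; the names, coordinates, and thresholds below are illustrative only.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class EdgeServer:
    name: str
    lat: float
    lon: float
    load: float         # fraction of capacity in use (0.0 to 1.0)
    cost_per_gb: float  # hypothetical processing cost for the provider


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle (haversine) distance between two coordinates."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371 * asin(sqrt(a))


def pick_server(user_lat, user_lon, servers, max_load=0.9):
    """Nearest server below max_load; cost breaks ties between equally near servers."""
    # If every server is overloaded, fall back to considering all of them.
    available = [s for s in servers if s.load < max_load] or list(servers)
    return min(
        available,
        key=lambda s: (distance_km(user_lat, user_lon, s.lat, s.lon), s.cost_per_gb),
    )


servers = [
    EdgeServer("Milan", 45.46, 9.19, load=0.95, cost_per_gb=0.02),
    EdgeServer("Frankfurt", 50.11, 8.68, load=0.40, cost_per_gb=0.02),
    EdgeServer("San Jose", 37.34, -121.89, load=0.20, cost_per_gb=0.01),
]

# A user in Rome: Milan is nearest but overloaded, so the next nearest server wins.
print(pick_server(41.90, 12.50, servers).name)  # Frankfurt
```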

While the dynamic content required for responding to the user request is obtained from the Main Server, the static content required is downloaded from the nearest edge server, determined by the CDN hub.

An edge server’s internal workings are similar to those of a browser cache, i.e. upon receiving a request, it searches its cache for the required data/content. If the server is unable to find the data in the cache, it reaches out to the Origin Server for the data.

The Origin Server is the source of truth for all the data that the website/CDN provides. The response from the Origin Server is saved/cached by the edge server for serving any future requests. Hence, a key performance metric for any Content Delivery Network is the percentage of requests that it is able to answer with static content straight out of the edge servers’ cache.
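The lookup-then-fallback behaviour, and the percentage of requests served from cache (often called the cache hit ratio), can be sketched in a few lines. This is an illustration only: `EdgeCache` and the `origin` function are hypothetical stand-ins, not any provider’s implementation.

```python
class EdgeCache:
    """Toy edge server: look in the cache first, fall back to the origin."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin  # callable: path -> content
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, path):
        if path in self._store:
            self.hits += 1
            return self._store[path]
        # Cache miss: fetch from the origin and keep a copy for future requests.
        self.misses += 1
        content = self._fetch(path)
        self._store[path] = content
        return content

    @property
    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def origin(path):
    # Stand-in for a real (and much slower) request to the Origin Server.
    return f"content of {path}"


edge = EdgeCache(origin)
for path in ["/logo.png", "/app.js", "/logo.png", "/logo.png"]:
    edge.get(path)

print(f"hit ratio: {edge.hit_ratio:.0%}")  # 2 hits out of 4 requests: 50%
```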

This brings us to a series of questions:

How do we decide what content must be placed on a CDN vs what must be handled by the Origin Server?

Do we store a copy of all the data at all Edge Servers, or is data stored based on usage statistics of the corresponding user base within the server’s vicinity?

The central idea is to store copies of content that does not change frequently, i.e. static content, on a CDN. Any data that must be dynamically provided based on the request information, such as user login, form data, etc., must be handled by the Origin Server.

The CDN hub determines which pieces of content are most accessed at a particular edge location and caches them there with a TTL (Time To Live). If the hub determines that particular content is gaining popularity across multiple edge locations, it proactively pushes that content to the edge. TTLs are managed at the POP.

So, most commonly, the final web response will be a combination of the static content retrieved from the edge server’s cache and the dynamic content retrieved via a request to the Origin Server.

How is the edge server’s cache maintained?

Since the edge servers are the most crucial part of a CDN network, it is important to maintain their cache effectively to make sure that the most frequently accessed and recently accessed data is always available for fast retrieval. Another important factor is to maintain the latest copy of the content on the edge server so that the user always gets the most current content.

Every cached object has an expiry (TTL – Time to Live) associated with it, and can also be forced out of the cache before it expires if required. Reasons for forced eviction include making space for new content, the content no longer being valid (i.e. it has been modified at the source or is no longer required), the content being rarely used on a particular CDN node/edge server, and many more.

A cached object can be deleted (removed completely) or invalidated (its expiry date set to a past date) on an edge server. If the object is invalidated, the edge server will request the updated/latest data from the Origin Server if applicable. However, if the object is deleted, no such attempts will be made.

Hence, invalidation is the most commonly used approach as it enables the edge server to still be able to provide the expired/stale version of data while the latest content is being obtained.
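The difference between deleting and invalidating can be made concrete with a small sketch. It is a simplification under stated assumptions: `TtlCache` is a hypothetical class, the fetch is synchronous, and "serving stale" is modelled as falling back to the old copy when the origin cannot be reached, rather than refreshing in the background as a real edge server might.

```python
import time


class TtlCache:
    """Toy edge cache with per-object expiry, invalidation and deletion."""

    def __init__(self, fetch_from_origin, ttl_seconds, clock=time.monotonic):
        self._fetch = fetch_from_origin  # callable: path -> content
        self._ttl = ttl_seconds
        self._clock = clock              # injectable so expiry can be tested
        self._entries = {}               # path -> (content, expires_at)

    def get(self, path):
        entry = self._entries.get(path)
        now = self._clock()
        if entry is not None and entry[1] > now:
            return entry[0]  # still fresh
        try:
            content = self._fetch(path)
        except ConnectionError:
            if entry is not None:
                return entry[0]  # invalidated or expired, but still usable
            raise                # deleted or never cached: nothing to fall back on
        self._entries[path] = (content, now + self._ttl)
        return content

    def invalidate(self, path):
        """Keep the object but mark it expired, forcing a refetch on next use."""
        if path in self._entries:
            content, _ = self._entries[path]
            self._entries[path] = (content, float("-inf"))

    def delete(self, path):
        """Remove the object completely; no stale copy remains."""
        self._entries.pop(path, None)
```

With this shape, an invalidated object can still be answered from the old copy while the origin is slow or down, whereas a deleted object cannot.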

Some of the most common cache invalidation strategies are:

- Purge – Immediate removal of content from cache. When a request is made for the corresponding content, the same is fetched and stored in the cache.

- Refresh – Fetching the new version of content from the origin server, even if the corresponding content is already available in the cache

- Ban – Fetching the new version of content from the origin server if the corresponding content is referenced in a “ban” list. This method does not involve removing the content from the cache beforehand; rather, the content is updated when a user request is received.
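The three strategies differ mainly in when the origin is contacted. The sketch below shows that difference, assuming a hypothetical `InvalidatingCache` class and a ban list of exact paths (real caching software typically supports richer ban expressions).

```python
class InvalidatingCache:
    """Toy cache illustrating purge, refresh and ban."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin  # callable: path -> content
        self._store = {}
        self._banned = set()

    def get(self, path):
        # A banned or missing object is fetched lazily, on the next request.
        if path in self._banned or path not in self._store:
            self._store[path] = self._fetch(path)
            self._banned.discard(path)
        return self._store[path]

    def purge(self, path):
        """Remove the object now; the next request refetches and stores it."""
        self._store.pop(path, None)

    def refresh(self, path):
        """Fetch a new copy from the origin right away, even if one is cached."""
        self._store[path] = self._fetch(path)

    def ban(self, path):
        """Leave the object in place, but mark it to be replaced on next request."""
        self._banned.add(path)
```

Note that `refresh` is the only operation here that talks to the origin immediately; `purge` and `ban` defer that work until a user actually asks for the content.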

Latest developments in CDN technology

Here are some of the latest advancements in CDN technology.

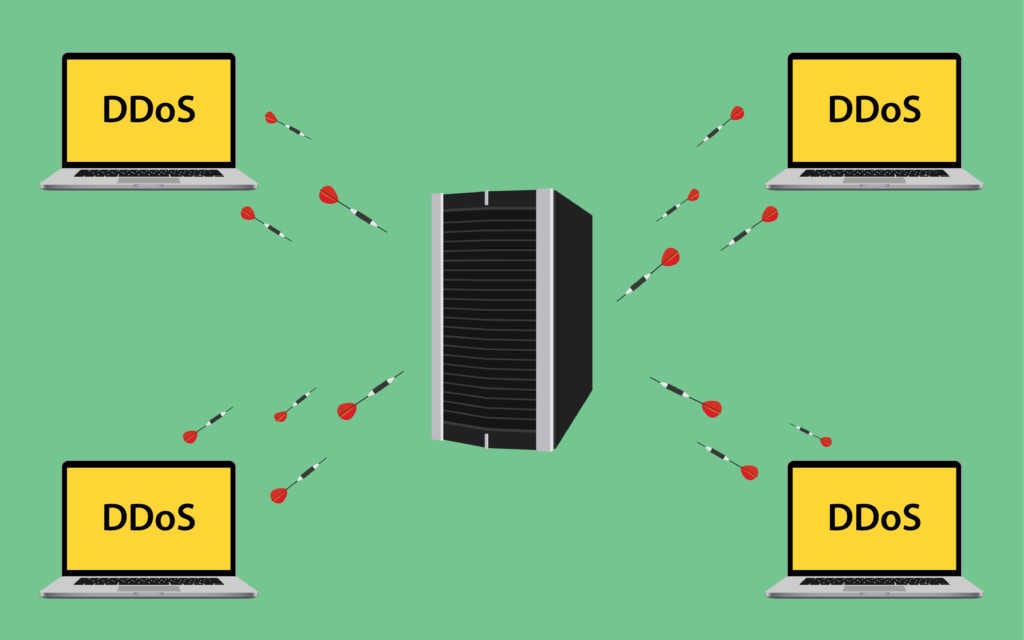

DDoS protection

A Distributed Denial of Service (DDoS) attack is one of the chief threats to a CDN. It involves the usage of malicious software to direct several thousands of machines/computers to send dummy requests to a specific target, with the intention of slowing down the network and making it unavailable to genuine user requests. This is harmful to the website’s reputation. CDN providers dealing with live video streaming and Video On Demand services are particularly affected by this threat, as it results in badly degraded online video quality.

These days, attackers find innumerable intelligent ways to break security systems and firewalls. With so many different points of origin, it is very difficult to distinguish a legitimate request from an attack request. Under these conditions, it is quite a challenging task for CDN providers to protect against DDoS attacks.

DDoS attacks can target the application layer (Layer 7 of the OSI model) to disable specific functionalities of a website. E.g. search function, add a friend, upload photo, etc. These attacks are often used as a point of distraction with an underlying intention to facilitate an unmonitored security attack. DDoS attacks also target network-level damage, which is usually accompanied by an application layer attack. Huge volumes of requests can be sent to saturate the bandwidth, resulting in a server-down scenario.

Fortunately, CDN providers have been able to devise strategies to identify and block these attacks.

Identifying that a network or a website is under attack involves continuously looking for signs including, but not limited to:

- The number of requests from a single IP exceeds a common threshold, usually measured in Requests Per Second (RPS)

- The number of requests vs the time taken to send them. E.g. if 68 requests are received in 1 minute from the same IP on a site where a typical visitor sends only a handful, something is likely wrong.

- Website average response times

- Log analysis – checking for unexpected traffic surges

- Receiving an excessive number of SYN packets

- Different IPs with the same packets of data

Monitoring scripts can be set up to look for these signs and raise an alarm via email to a support group.
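As an illustration of the first sign in the list (too many requests from one IP in a given time), here is a minimal sliding-window counter. `RateMonitor` and its thresholds are hypothetical; a real monitoring script would read timestamps from live traffic or logs and send the alert, which is left out here.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flags an IP once it sends more than max_requests within the window."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window = window_seconds
        self._seen = defaultdict(deque)  # ip -> timestamps inside the window

    def record(self, ip, timestamp):
        """Record one request; return True if the IP now exceeds the threshold."""
        recent = self._seen[ip]
        recent.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while recent and recent[0] <= timestamp - self.window:
            recent.popleft()
        return len(recent) > self.max_requests


monitor = RateMonitor(max_requests=5, window_seconds=1.0)

# Ten requests in under a second from one address (documentation IP range).
flags = [monitor.record("203.0.113.7", i * 0.1) for i in range(10)]
print(flags.index(True))  # 5: the sixth request is the first one flagged
```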

Once the attack is identified, the next hurdle is to mitigate the impact on the application. This is difficult because separating the legitimate requests from the attack is not an easy task. Some of the approaches are:

- Block IP addresses automatically – a blacklist. This can be obtained via a log analysis

- Block brute force attacks – identify the IP addresses based on RPS and block them if the RPS indicates attack-like behavior

- Block requests based on packet size, length and more
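A rough sketch of the first and third approaches: build a blocklist from log analysis, then drop requests from listed IPs or with oversized payloads. It assumes each log line begins with the client IP (as in the common access-log layout); the function names and limits are hypothetical.

```python
from collections import Counter


def build_blocklist(log_lines, threshold):
    """Return the IPs that appear in the log more than `threshold` times."""
    counts = Counter(line.split()[0] for line in log_lines if line.strip())
    return {ip for ip, hits in counts.items() if hits > threshold}


def should_drop(ip, payload_bytes, blocklist, max_payload_bytes):
    """Drop a request if its sender is blocklisted or its payload is too large."""
    return ip in blocklist or payload_bytes > max_payload_bytes


log = (
    ["203.0.113.7 GET /search?q=a"] * 50
    + ["198.51.100.2 GET /index.html"] * 3
)
blocklist = build_blocklist(log, threshold=20)
print(sorted(blocklist))  # ['203.0.113.7']
```

A static blocklist like this is blunt: it can catch legitimate users behind a shared address, which is one reason separating good traffic from bad is hard.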

Akamai (a leading CDN provider) offers a service called “Kona DDoS defender” that provides protection against DDoS attacks. It addresses situations where an application/website wants to be prepared for any DDoS attacks or is already under attack. Kona DDoS defender has the capability to stop the attacks at the edge before they reach the application, thus ensuring no negative impact on the website performance. Network layer attacks such as SYN floods are automatically deflected. It performs real-time analysis of the ongoing attacks and tunes rules or creates custom rules to adapt to the attack traits. The protection is called a “Broad Protection”, as it can cope with the changing size and complexity of the attack. You can find more information in detail here.

Content Delivery Network for DevOps

Gone are the days when production deployments were tedious and we had pre-determined deployment plans for the whole quarter or even a year. Today, the gap between deployments can be minutes or even seconds! This highly agile environment demands capabilities like updating content, recalling content and deploying new versions within no time, i.e. effective cache management is required from the CDNs. For example, if a website posts an article on “10 reasons why you should hate Harry Potter” and, after an hour, the report/data analysis says that more than 80% of the users are abandoning the website within 10 seconds, the article must be recalled. Similarly, consumers want the latest game scores, election poll results, live telecasts, movie reviews, flash news, etc. Basically, the world wants to see things as they happen.

The DevOps teams must be able to push new features and new content, analyze performance issues such as slow image loading, frustrating video buffering, etc., fix them, and re-deploy in no time.

CDNs today provide strong support to DevOps teams to help them keep up the desired pace. Here are some of the capabilities:

- Real-time analytics – support for troubleshooting at the most granular level, plus API-based analytics

- Optimal caching strategies – faster propagation of invalidation requests, faster change rollout, faster purge times, and automatic optimization to support ad hoc content deployments

- Mitigating security threats – mainly DDoS protection

All these features can be accessed and controlled via a highly interactive UI and/or via API calls.

Edge Computing and IoT

So far we have seen how CDNs maintain static content at the edge for quick data retrieval and latency reduction. The next step in this journey is to grant computational power to the edge – Edge Computing! Low latency has never been more important than it is now. The older the data gets, the less valuable it becomes.

In a typical IoT use case, an edge device such as a sensor collects data and pushes all of it to a cloud/Data Center for computation/processing. With Edge Computing, some of the data is processed locally, resulting in reduced traffic and latency and an improved Quality of Service (QoS). This is ideal for several industries today, such as medical, financial, manufacturing etc.
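To illustrate what "locally processed" can mean, here is a minimal sketch in which an edge node reduces a batch of raw sensor readings to a small summary and only that summary is sent upstream. The function name, the fields, and the alert threshold are assumptions for illustration, not any vendor’s API.

```python
from statistics import mean


def summarize_at_edge(readings, alert_threshold):
    """Reduce raw sensor readings to a compact summary before upload."""
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Only readings that need attention are forwarded individually.
        "alerts": [r for r in readings if r > alert_threshold],
    }


# Temperature samples collected at the edge (made-up values).
readings = [20.0, 21.0, 22.0, 35.0]
summary = summarize_at_edge(readings, alert_threshold=30.0)
print(summary)
```

Instead of four raw values (or four thousand), the data center receives one small record, which is where the traffic and latency savings come from.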

LimeLight (a leading CDN provider) offers services to manage the growth of IoT data that enable local computation of data. It has a high-speed private network, isolated from the public network, providing enhanced performance and security. By strategically positioning the PoPs around the globe, it eliminates latencies induced by data transfer to distant Data Centers. The “Edge Analytics” and distributed services bring the computational power closer to the data source and reduce cost, complexity and latency. With the “Distributed Object Storage”, data can be quickly stored in a local PoP, rather than being transferred to centralized cloud storage.

It is also important to understand the security aspects of granting computational power to the edge servers. One point of view is that it is more secure to process data at the edge, closer to its source, than to transfer it over a network, where it is vulnerable. Another point of view is that the peers in the edge network must take strong precautions when dealing with sensitive data without the involvement of the Origin Server. Hence, advanced security mechanisms/encryption techniques must be applied to secure edge computing.

Last Mile Acceleration in Content Delivery Network – CDN

“Last Mile” is a phrase used popularly in Telecommunications and the Internet. It refers to the part of the network that physically connects to the end user’s premises. It also affects the performance metrics of a CDN, which measure how quickly the content is delivered to a user, including the last piece of connectivity from the ISP to the customer’s device. Content delivery usually slows down at the last mile, especially for users browsing on a mobile device.

“Last Mile Acceleration” involves accelerating data in the last mile via compression techniques. If the data/content provided by the Origin server is un-compressed, compression is applied at the edge server to achieve low latency. If compressed data is received from the Origin server, it is transmitted as-is.
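The compress-only-if-needed rule can be sketched as follows. This is an illustration using gzip from the Python standard library; `prepare_for_last_mile` is a hypothetical helper, and a real edge server would also check which encodings the client accepts before compressing.

```python
import gzip


def prepare_for_last_mile(body: bytes, headers: dict) -> tuple[bytes, dict]:
    """Compress an origin response at the edge unless it is already encoded."""
    if headers.get("Content-Encoding"):
        # The origin already compressed the body: pass it through as-is.
        return body, headers
    compressed = gzip.compress(body)
    new_headers = dict(headers)
    new_headers["Content-Encoding"] = "gzip"
    new_headers["Content-Length"] = str(len(compressed))
    return compressed, new_headers


page = b"<p>hello from the origin</p>" * 200
small, small_headers = prepare_for_last_mile(page, {"Content-Type": "text/html"})
print(len(page), "bytes before,", len(small), "bytes after")
```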

Akamai offers “Last Mile Acceleration” as a setting that CDN customers can enable if they want to use the feature.

Adaptive Content Delivery

This deals with delivering content adapting to several elements such as:

- Customer’s available bandwidth

- Predicting future content needs/access

- Content-specific CDN service – Mobile, Video, and more!

The idea encapsulates services such as Adaptive Bitrate Streaming and Adaptive Acceleration, which aim to meet changing user/device/network needs to ensure stable and increased user engagement.

Adaptive Bitrate Streaming (ABR) deals with providing a high-quality experience for different network types, different connection speeds, different resolutions etc. The user’s bandwidth is monitored in real-time and the quality of the video stream is adjusted to suit it. This enables an uninterrupted service to the customer through constant adaptation to the factors that impact flawless content delivery.
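At its core, the adjustment step is a choice from a fixed set of renditions. The sketch below picks the highest bitrate that fits within a safety margin of the measured bandwidth. The bitrate ladder and the 0.8 margin are made-up values; production players use more elaborate heuristics such as buffer levels and throughput history.

```python
BITRATE_LADDER_KBPS = [400, 1200, 2500, 5000]  # hypothetical available renditions


def choose_bitrate(measured_kbps, ladder=BITRATE_LADDER_KBPS, safety=0.8):
    """Pick the highest rendition that fits within a fraction of the bandwidth."""
    budget = measured_kbps * safety
    affordable = [rate for rate in sorted(ladder) if rate <= budget]
    # If even the lowest rendition does not fit, play it anyway.
    return affordable[-1] if affordable else min(ladder)


# Bandwidth samples over time: the chosen quality follows the connection.
for measured in [6500, 4000, 900, 300]:
    print(measured, "kbps measured ->", choose_bitrate(measured), "kbps stream")
```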

Adaptive Acceleration deals with monitoring the users in real-time to enable smart content caching, i.e. caching content that has a higher likelihood of being accessed by the users in the near future. This approach considerably reduces turn-around times, latency, and the network load.

Akamai offers this service too as a feature that can be turned on as per the CDN customer’s preference. This feature works in two ways: Automatic Server Push and Automatic Pre-Connect. “Automatic Server Push” enables the server to send multiple responses to a single request. I.e. References to the multiple items that the browser needs such as CSS, Javascript etc. are sent in the response. “Automatic Pre-Connect” enables the browser to pre-determine the connections it would need and establish them beforehand.

Smart Purging

Content freshness is one of the key success factors for a CDN. Not only is it important for content to be fresh, but also to have an easy way to maintain the content freshness. For any CDN, managing content on a global network of PoPs is a difficult task. Hence, if cache management tasks such as purging take a lot of time, content freshness cannot be ensured. This can result in issues such as broadcasting old content, downloading incorrect files etc., which can be really serious for industries such as Medical and Financial.

LimeLight introduced “Smart Purge”, a next-generation purging technique that executes fast purges across the global network. It provides features such as the capability to purge based on pattern matching, collecting statistics on the data to be purged (a mock run), cache invalidation and much more.
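The idea of a pattern-based purge with a mock run can be shown with a small generic sketch. This is not LimeLight’s API: `purge_matching` is a hypothetical function operating on a plain dictionary, using shell-style wildcards from the Python standard library.

```python
from fnmatch import fnmatchcase


def purge_matching(cache, pattern, dry_run=True):
    """Purge cached paths matching a wildcard pattern.

    With dry_run=True nothing is removed; the matches are only reported,
    which shows what a purge would affect before running it for real.
    """
    matched = sorted(path for path in cache if fnmatchcase(path, pattern))
    if not dry_run:
        for path in matched:
            del cache[path]
    return matched


cache = {
    "/img/a.png": b"...",
    "/img/b.png": b"...",
    "/css/site.css": b"...",
}

print(purge_matching(cache, "/img/*.png"))         # mock run: lists both images
purge_matching(cache, "/img/*.png", dry_run=False)
print(sorted(cache))                               # only the stylesheet is left
```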

You can find detailed information on Smart Purge here.

HTTP/2 and IPv6 support with Content Delivery Network – CDN

HTTP/2 is a revision of HTTP that changes how messages are framed and transported but does not alter HTTP semantics: it supports the same methods, status codes etc. The focus of the revision is on enhancing performance and reducing the latency perceived by the end-user. Given that focus, it goes without saying that CDNs are a major use case for the revised protocol. Major CDN companies such as Akamai (the first one to support it), Cloudflare, AWS CloudFront etc. support HTTP/2 today. It has an extensive list of benefits such as multiplexing, concurrency, header compression, stream dependencies, server push, improved encryption (in practice, browsers use HTTP/2 only over TLS) and much more! All these features enable advanced capabilities such as:

- Requests can be sent in rapid succession and responses can be received in any order. Due to this, multiple connections between client and server are not required.

- Resource priorities can be passed to the Server

- Significant reduction in the HTTP request header size

- The server can push the resources that haven’t been requested by the client yet

Applications designed to take advantage of these new capabilities must also take caution to maintain a balance between performance and utility.

IPv6 is the most recent version of the Internet Protocol (IP), designed to deal with the IPv4 address exhaustion problem. CDN companies are making sure that their customers have a smooth transition in deploying IPv6 technologies. This will enable the customers to reach the end-users via an IPv4/IPv6 hybrid network while ensuring performance and security.

How do customers get charged/billed for CDN usage

Customers are billed for CDN usage based on the billing region, such as USA, Europe, Japan etc. The billing region is based on the location of the POP that serves the request, not on the physical location where the request originates. Requests are categorized by HTTP response type (success/failure). Header-only responses (e.g. 304 Not Modified), error responses (e.g. 404 Not Found) etc. are billable but result in minimal charges as they carry a negligible payload. HTTP and HTTPS requests may have different pricing options associated with them.

Billing also depends on the GB used for storage of content and the amount of data transferred to maintain the CDN cache. Setting longer TTLs on cached data is one way to reduce the bill, as it reduces the frequency of data transfers required for cache maintenance. One of the pricing models for CDN billing is per-GB: for websites with a good estimate of their usual bandwidth consumption and frequent traffic surges, this can be a suitable option, as the billing is based on the GB consumed without additional costs for peak load times.
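A back-of-the-envelope calculation shows why longer TTLs help. The sketch below assumes a deliberately simple model in which every TTL expiry causes each POP to re-download the full object set from the origin, and all rates are made-up numbers, not any provider’s pricing.

```python
def monthly_cost(delivered_gb, stored_gb, object_gb, ttl_hours, pops,
                 delivery_rate, storage_rate, refill_rate):
    """Estimate a monthly per-GB bill under a simplified cache-refill model."""
    refreshes = (30 * 24) / ttl_hours           # refills per POP in a 30-day month
    refill_gb = refreshes * object_gb * pops    # origin-to-edge transfer
    return (delivered_gb * delivery_rate
            + stored_gb * storage_rate
            + refill_gb * refill_rate)


# Hypothetical usage and rates (currency units per GB).
usage = dict(delivered_gb=1000, stored_gb=50, object_gb=0.5, pops=10,
             delivery_rate=0.08, storage_rate=0.02, refill_rate=0.01)

short_ttl = monthly_cost(ttl_hours=1, **usage)
long_ttl = monthly_cost(ttl_hours=24, **usage)
print(f"1-hour TTL: {short_ttl:.2f}, 24-hour TTL: {long_ttl:.2f}")
```

In this toy model the delivery and storage charges are identical in both cases; the whole difference comes from how often the caches are refilled.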

Utilization of any CDN services such as Data encryption and Custom SSL certificates will incur additional costs.

Major CDN Players and comparison – Akamai VS LimeLight

Some of the major CDN players are Akamai, LimeLight, Incapsula, MaxCDN, Cloudflare and AWS. We have been looking at some of the features that Akamai and LimeLight offer through the latest developments in the CDN area. Below is a comparison of the Akamai and LimeLight CDNs.

Both have highly developed global networks and provide CDN platforms that ensure efficient and fast data transfer. Akamai is one of the oldest Content Delivery Networks and is considered a global leader. LimeLight has been a strong competitor since 2001.

When comparing different Content Delivery Networks, the below capabilities/features are some of the key deciding factors:

- Content Delivery Features – e.g. Video on Demand, image optimization, DDoS protection, Data compression etc.

- POPs

- Cache maintenance/invalidation strategies supported

- Setup complexity

- Security

- Analytics

- Customization support

- Load balancing technology

- Pricing

- Advanced CDN feature provision – DevOps support, Last Mile acceleration etc. as mentioned above

Both Akamai and LimeLight support all the major features and latest advancements in the CDN domain. Here is an overview of the comparison:

- They both support Video on Demand, DDoS Protection, Data compression etc. LimeLight does not support Image Optimization

- From a POP network point of view, Akamai has 100,000 servers located in over 80 countries and LimeLight has 80 POPs in countries including the USA, Germany, India, UAE, Korea, Singapore, and Japan

You can refer to the detailed comparison provided by CDN Planet here.

Conclusion

Content Delivery Networks administer over half of the world’s internet traffic and ensure that the users get a seamless and enriched web experience. Content is made available in the blink of an eye via edge network setup, effective caching, reliable security techniques and adaptive streaming.

It is very easy to grasp the concept of a CDN, but very difficult to master it and understand the layers of complexity, to make the most out of it.

All in all, without CDNs, we would all be staring at our computer screens in a Star Wars-like setting, waiting for a response “from a server far far away!”
